MtxVec v6

With AI-assisted conversion to MtxVec, quickly deliver up to 10x faster Delphi, C# or C++ code

Comprehensive and fast numerical math library

Support for VS.NET, Embarcadero Delphi and C++ Builder

Statistical and DSP add-ons

Latest News

Accelerating software development with AI in 2026

As the AI revolution gains momentum, we would like to share our recent AI tool setups. Many developers are still wary that their code might be quietly absorbed by the big AI companies, and this is not an unfounded concern. It is, however, possible to work with AI while maintaining a reasonable level of code privacy and security.

Numerical library for Delphi and .NET developers

Dew Research develops mathematical software for advanced scientific computing, trusted by many customers. MtxVec for Delphi, C++Builder or .NET is an alternative to products such as MATLAB, LabVIEW, O-Matrix and Scilab. We offer a high-performance math library, statistics library and digital signal processing (DSP) library for:

- Embarcadero/CodeGear Delphi and C++Builder numerical libraries and components

- Microsoft .NET components, including Visual Studio add-ons and a .NET numerical library for C++, C#, and Visual Basic

Product Features

-

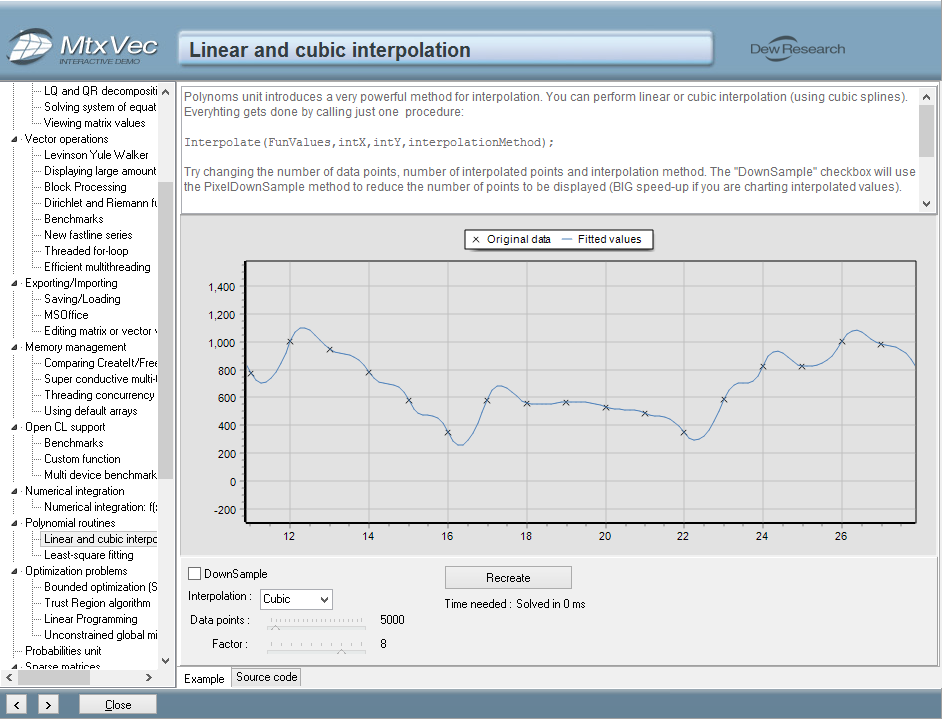

A comprehensive set of mathematical, signal processing and statistical functions

-

Substantial performance improvements of floating point math by exploiting the SSE4.2, AVX, AVX2, AVX512 instruction sets offered by modern CPUs.

-

Solutions based on it scale linearly with core count which makes it ideal for massively parallel systems.

-

Improved compactness and readability of code.

-

Support for native 64bit execution gives free way to memory hungry applications

-

Significantly shorter development times by protecting the developer from a wide range of possible errors.

-

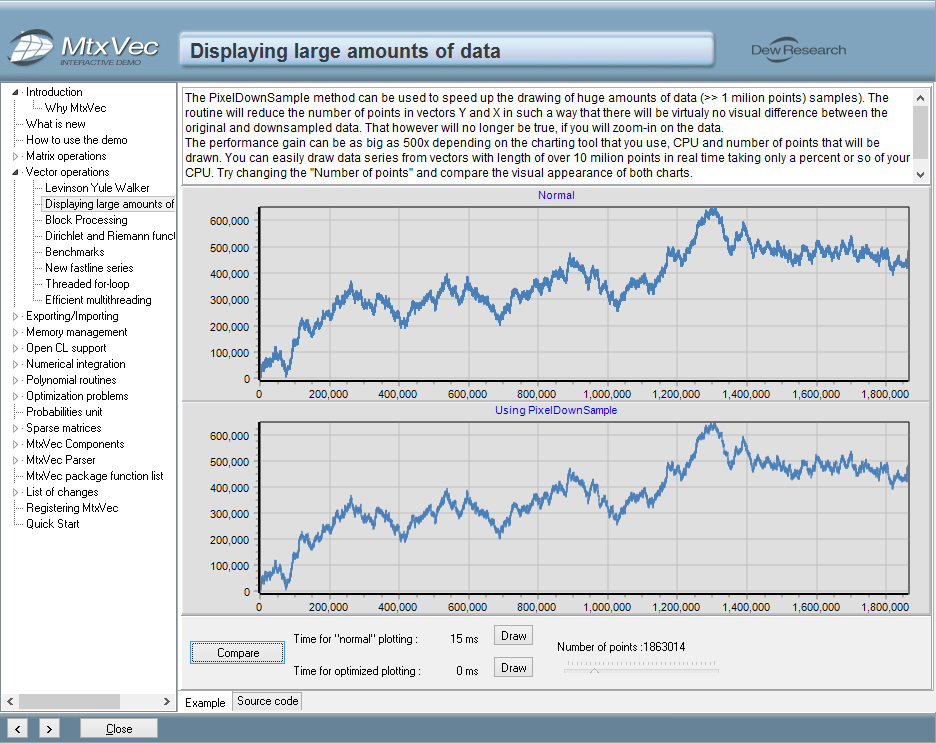

Direct integration with TeeChart© to simplify and speed up the charting.

-

No royalty fees for distribution of compiled binaries in products you develop

Optimized Functions

Performance Secrets

Code vectorization

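MtxVec achieves substantial performance improvements in floating-point arithmetic by exploiting the SIMD (Single Instruction, Multiple Data) instruction sets of modern CPUs: SSE4.2, AVX, AVX2 and AVX-512. A single SIMD instruction operates on several values at once; for example, one AVX instruction can add eight single-precision floats held in a 256-bit register.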